
Companies Rely on Multiple Methods to Secure Generative AI Tools

To protect their own and their customers' data, organizations are exploring different approaches to guard against the unwanted effects of using AI.

As more organizations adopt generative artificial intelligence (AI) technologies to craft pitches, complete grant applications, and write boilerplate code, security teams are realizing they need to address a new question: How do you secure AI tools?

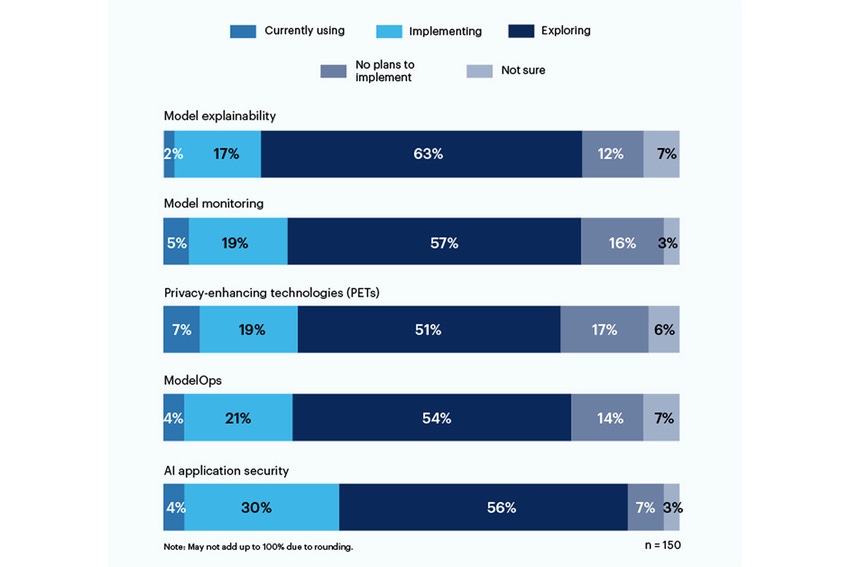

One-third of respondents in a recent survey from Gartner reported either using or implementing AI-based application security tools to address the risks posed by the use of generative AI in their organizations.

Privacy-enhancing technologies (PETs) show the greatest current use, with 7% of respondents already using them and a solid 19% of companies implementing them. This category includes ways to protect personal data, such as homomorphic encryption, AI-generated synthetic data, secure multiparty computation, federated learning, and differential privacy. However, 17% of respondents said they have no plans to implement PETs in their environments.

Only 19% said they are using or implementing tools for model explainability, but 56% of respondents expressed significant interest in exploring and understanding these tools to address generative AI risk. Explainability, model monitoring, and AI application security tools can all be used on open source or proprietary models to achieve the trustworthiness and reliability enterprise users need, according to Gartner.

Risks that the respondents said they are most concerned about include incorrect or biased outputs (58%) and vulnerabilities or leaked secrets in AI-generated code (57%). Significantly, 43% also cited potential copyright or licensing issues arising from AI-generated content as top risks to their organizations.

"There is still no transparency about [what] data models are training on, so the risk associated with bias and privacy is very difficult to understand and estimate," a C-suite executive wrote in response to the Gartner survey.

In June, the National Institute of Standards and Technology (NIST) launched a public working group to help address that issue, building on the AI Risk Management Framework it released in January. As the Gartner data shows, companies are not waiting for NIST directives.
