The Dark Side of AI

Sophisticated fraudsters are exploiting ChatGPT and CAPTCHAs to evade enterprise security defenses.

New AI tools offer easier and faster ways for people to get their jobs done — including cybercriminals. AI makes launching automated attacks more efficient and accessible.

You've likely heard of several ways threat actors are using ChatGPT and other AI tools for nefarious purposes. For example, generative AI has been shown to write successful phishing emails, identify targets for ransomware, and assist with social engineering. But what you probably haven't heard is how attackers are exploiting AI technology to directly evade enterprise security defenses.

While there are policies that restrict the misuse of these AI platforms, cybercriminals have been busy figuring out how to circumvent these restrictions and security protections.

Jailbreaking ChatGPT Plus and Bypassing ChatGPT's API Protections

Bad actors are jailbreaking ChatGPT Plus in order to use the power of GPT-4 for free without all of the restrictions and guardrails that attempt to prevent unethical or illegal use.

Kasada's research team has found that people are also gaining unauthorized access to ChatGPT's API by using code from GitHub repositories, such as those shared on the GPT jailbreaks Reddit thread, to remove geofencing and other account limitations.

Credential-stuffing configs can also be modified with ChatGPT if users find the right OpenAI bypasses from resources like the gpt4free project on GitHub, which tricks OpenAI's API into believing it's receiving a legitimate request from websites with paid OpenAI accounts, such as You.com.

These resources make it possible for fraudsters to not only launch successful account takeover (ATO) attacks against ChatGPT accounts but also to use jailbroken accounts to assist with fraud schemes across other sites and applications.

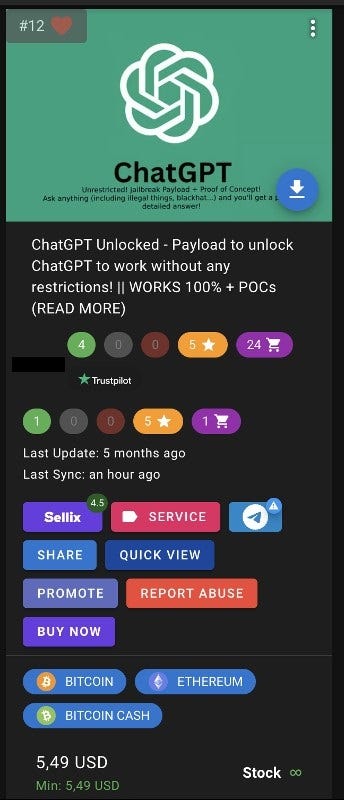

Jailbroken and stolen ChatGPT Plus accounts are actively being bought and sold on the Dark Web and other marketplaces and forums. Kasada researchers have found stolen ChatGPT Plus accounts for sale priced as low as $5, which is effectively a 75% discount off the $20 monthly subscription price.

Source: Kasada

Stolen ChatGPT accounts have major consequences for account owners and for other websites and applications. For starters, when threat actors gain access to a ChatGPT account, they can view the account's query history, which may include sensitive information. Additionally, bad actors can easily change the account credentials, locking the original owner out entirely.

More critically, stolen and jailbroken accounts set the stage for further, more sophisticated fraud. With the guardrails removed, cybercriminals can more easily use the power of AI to carry out targeted, automated attacks on enterprises.

Bypassing CAPTCHAs with AI

Another way threat actors are using AI to exploit enterprise defenses is by evading CAPTCHAs. While CAPTCHAs are universally hated, they still secure 2.5 million — more than one-third — of all Internet sites.

Advances in AI make it easy for cybercriminals to bypass CAPTCHAs. ChatGPT admitted that it could solve a CAPTCHA, and Microsoft recently announced an AI model that can solve visual puzzles.

Additionally, sites that rely on CAPTCHAs are increasingly susceptible to sophisticated bots that bypass them with ease using AI-assisted CAPTCHA solvers, such as CaptchaAI. These solvers are inexpensive and easy to find, which makes them a significant threat to online security.

Conclusion

Even with strict policies in place to try to prevent abuse of AI platforms, bad actors are finding creative ways to weaponize AI and launch attacks at scale. As defenders, we need greater awareness, collaborative efforts, and robust security designed to fight AI-powered cyber threats, which will continue to evolve faster than ever before.
