Privacy & Security Concerns With AI Meeting Tools

Businesses need to find a balance between harnessing the benefits of AI assistants and safeguarding sensitive information while maintaining trust with employees and clients.


COMMENTARY

AI-powered meeting assistants like Otter.ai, Zoom AI Companion, and Microsoft 365 Copilot promise increased employee productivity and a reliable record of discussions by attending online meetings alongside or instead of participants. AI assistants can record video and transcribe audio, summarize notes and actions, provide analytics, and even coach speakers on more effective communication. But do the benefits outweigh the associated security and privacy risks?

Consider this: If a stranger appeared in a meeting room, intent on recording the conversation and using that information for unknown purposes, would that person be allowed to proceed unchallenged? Would the same conversation with the same level of candor occur? The answer, of course, is no. So why are businesses allowing AI meeting assistants to eavesdrop on conversations and collect potentially sensitive data?

Content Privacy

These applications pose a significant privacy and security risk to corporate information and those being recorded. The potential for misuse is a pressing concern, and many organizations have yet to work out how best to manage it. This technology is spreading faster than awareness of its risks, underscoring the need for immediate action.

The first victim of AI eavesdropping might be the quality of the conversation. Employees who speak candidly about co-workers, managers, the company and its customers, or investors might find themselves disciplined based on the assistant's transcript, which could easily be taken out of context. In turn, the fear of how recordings might be used could also stymie innovation and transparency.

Other risks include employees feeling obliged to consent against their will because a more senior colleague wants to use an assistant, and an overreliance on the veracity of transcriptions, which may contain mistakes that, unchecked, become a record of fact.

Online meetings also frequently include discussion of personal data, intellectual property, business strategy, unreleased information about a public company, or information about security vulnerabilities, all of which could cause legal, financial, and reputational headaches if leaked. Existing tools to stop leaks, such as data loss prevention systems, would not prevent the data from leaving the organization's control.

There is considerable potential for unauthorized access to or misuse of recorded conversations. Though enterprise solutions might offer some control through administrative safeguards, third-party applications often have fewer protections, and it may not always be clear how or where a provider will store data, for how long, who will have access to it, or how the service provider might use it.

Privacy and Security Often an Afterthought

Some transcription tools may allow the provider to ingest and use the data for other purposes, such as training the algorithm. Users of virtual meeting provider Zoom complained last year after an update to Zoom's terms of service raised concerns that customer data would be used to train the company's AI algorithm. Zoom was forced to update its terms and clarify how and when customer data would be used for product improvement purposes.

Zoom's past data privacy issues serve as a stark reminder of the potential consequences. A settled Federal Trade Commission investigation and a settled $86 million class-action privacy lawsuit demonstrated that fast-growing startups can overlook data privacy and security.

Companies in this space may also end up inadvertently making themselves a target for hackers intent on getting access to thousands of hours of corporate meetings. Any leak, regardless of content, would be reputationally damaging for both the provider and customer.

The AI revolution does not stop at online meetings, though. Gadgets such as Humane's wearable AI Pin take the assistant concept a step further and can record any interaction throughout the day and process the content. In such cases, it seems even less likely that users of the pin will ask other parties for consent each time, easily exposing sensitive conversations.

Legal Considerations

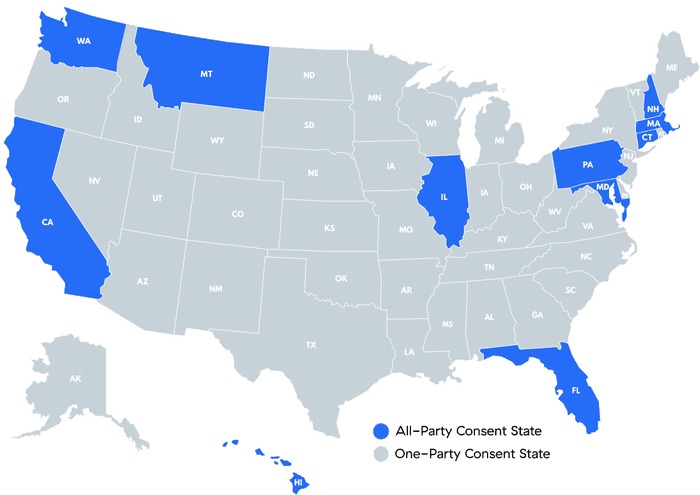

The key legal consideration with regard to AI assistants is consent. Most AI assistants include a clear and conspicuous recording consent mechanism to comply with laws like the California Invasion of Privacy Act, which makes it a crime to record a person's voice without their knowledge or consent. However, legal requirements vary: 11 states in the US, including California, have "all-party" consent laws, requiring all participants to consent to be recorded, while the remainder have "one-party" consent laws, where only one participant, typically the one doing the recording, needs to consent.

Map of All-Party and One-Party Consent States

By taking these proactive steps, businesses can harness the benefits of AI assistants, while safeguarding their sensitive information and maintaining trust with employees and clients. By preventing incidents before they occur and ensuring that the integration of AI in meetings enhances productivity without compromising privacy and security, we can improve and revolutionize team collaboration.

Participants in online work meetings might assume privacy, but this often depends on the company's policies and the jurisdiction. In the US, workplace privacy typically is limited by company policies. In contrast, the European Union and its member states, particularly Germany and France, offer stronger privacy protections in the workplace.

Noncompliance with recording laws can lead to criminal liability, which is rarely enforced, and civil damages and penalties, which are often litigated. More than 400 cases related to unlawful recordings have been filed in California alone this year, with thousands more in arbitration, though none are thought to be related to AI assistants — yet.

Managing Risk

As AI assistants become increasingly integrated into both professional and personal spheres, the urgency of addressing privacy and security concerns cannot be overstated. To manage the risks, companies must quickly assemble dedicated teams to assess emerging technologies, document policies, and socialize them across the organization.

A comprehensive policy should outline the authorized use of AI assistants, consent requirements, data management and data protection protocols, and clear consequences for violations. Continuous updates to these policies are essential as technology evolves, and in parallel, there is a critical need to educate employees about potential risks and encourage a culture of vigilance.
