Is CVSS the Right Standard for Prioritization?

More than 55% of open source vulnerabilities are rated high or critical. To truly understand a vulnerability and how it might affect an organization or product, we need much more than a number.

The software development industry's growing reliance on open source components has raised awareness of open source security vulnerabilities. As WhiteSource's annual report, "The State of Open Source Security Vulnerabilities," shows, the result has been a drastic increase in the number of discovered open source vulnerabilities.

Faced with a continuously growing number of open source vulnerabilities in their codebases, how can software development organizations keep up with the long list of security alerts and make sure they remediate the most urgent issues first?

Prioritization of security alerts has become an important part of vulnerability management, and many organizations treat a vulnerability's CVSS (Common Vulnerability Scoring System) score as an objective standard for prioritizing their open source security vulnerabilities. However, data in the WhiteSource research report shows that relying on CVSS ratings for prioritization will get organizations only so far.

What's in a CVSS Score?

Since 2005, the CVSS from the good folks at FIRST (the Forum of Incident Response and Security Teams) has become the standard for assessing the severity of a vulnerability. The CVSS assesses a vulnerability through three metric groups (base, temporal, and environmental); the exploitability and impact metrics in the base group describe how hard an exploit is to carry out and the level of damage it can cause if the attacker is successful.
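To make the base metric group concrete, here is a minimal sketch of the CVSS v3.1 base-score calculation, using the metric weights and equations published in the v3.1 specification. It covers only the base group (any temporal or environmental metrics in the vector are ignored), and the helper names `roundup`, `parse_vector`, and `base_score` are our own, not part of any official library.

```python
import math

# Metric weights as published in the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}    # Attack Vector
AC = {"L": 0.77, "H": 0.44}                          # Attack Complexity
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}     # Privileges Required, Scope Unchanged
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}        # Privileges Required, Scope Changed
UI = {"N": 0.85, "R": 0.62}                          # User Interaction
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}               # Confidentiality/Integrity/Availability


def roundup(value: float) -> float:
    """Round up to one decimal place, following the v3.1 Roundup definition."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (math.floor(int_input / 10_000) + 1) / 10.0


def parse_vector(vector: str) -> dict[str, str]:
    """Turn 'CVSS:3.1/AV:N/AC:L/...' into {'AV': 'N', 'AC': 'L', ...}."""
    parts = vector.split("/")
    if not parts[0].startswith("CVSS:3"):
        raise ValueError("expected a CVSS v3.x vector string")
    return dict(part.split(":", 1) for part in parts[1:])


def base_score(vector: str) -> float:
    m = parse_vector(vector)
    scope_changed = m["S"] == "C"

    # Impact sub-score: how much damage a successful exploit does.
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if scope_changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    # Exploitability sub-score: how hard the exploit is to carry out.
    pr = (PR_CHANGED if scope_changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]

    if impact <= 0:
        return 0.0
    if scope_changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


if __name__ == "__main__":
    print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))
```

For the vector above (network-reachable, no privileges or user interaction required, full loss of confidentiality, integrity, and availability), the sketch returns 9.8. Note that the environmental group, which is where an organization's own context enters the formula, is deliberately left out here.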

The CVSS has continued to evolve over the years as the community works to improve the scoring system. New metrics have been added, such as Scope and User Interaction, which arrived with CVSS v3 in 2015 and capture information that was not available in v2. More recently, CVSS v3.1 was released to "clarify and improve the existing standard."

However, even as the changes made in v3 gave organizations valuable details for ascertaining the severity of a given vulnerability, the shift to the new standard had one significant consequence.

Were All Vulnerabilities Created Equal? CVSS Ratings Over Time

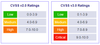

The WhiteSource research report analyzed the CVSS ratings of more than 10,000 open source vulnerabilities that were reported between 2016 and 2019 to compare the severity breakdown of vulnerabilities rated by CVSS v2, v3.0, and v3.1.

The data shows that v3.0 and v3.1 scores are significantly higher than v2 scores. For instance, a vulnerability scored 7.6 under CVSS v2 may be scored 9.8 under v3.x.

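Comparing the v2.0 and v3.0 rating standards provides a partial explanation for this dramatic shift. The v2 severity ranges used by the NVD have three levels (Low: 0.0-3.9, Medium: 4.0-6.9, High: 7.0-10.0), while the CVSS v3.0 specification defines five (None: 0.0, Low: 0.1-3.9, Medium: 4.0-6.9, High: 7.0-8.9, Critical: 9.0-10.0).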

Source: WhiteSource
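To see how the rating bands alone change the labels, the sketch below encodes both qualitative scales and classifies the same numeric scores under each. It is a minimal illustration that assumes the NVD's v2 severity bands and the v3.0 specification's bands; the function names are hypothetical.

```python
def v2_rating(score: float) -> str:
    """Qualitative rating for a CVSS v2 score, using the NVD's v2 severity bands."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"score out of range: {score}")
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    return "High"


def v3_rating(score: float) -> str:
    """Qualitative rating for a CVSS v3.x score, per the v3.0 specification."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


if __name__ == "__main__":
    # v2 has no "Critical" band, so everything from 7.0 up collapses into "High".
    for score in (0.0, 5.0, 7.6, 9.8):
        print(f"{score:4.1f}  v2={v2_rating(score):<6}  v3={v3_rating(score)}")
```

A v2 score of 7.6 lands in the top v2 band, "High"; if the same vulnerability is rescored as 9.8 under v3.x, it is labeled "Critical", a category v2 never had.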

Critical and High Severity Vulnerabilities Are on the Rise

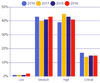

After showing that CVSS v3.x scores are generally higher, the research report goes on to compare the severity distribution of vulnerabilities rated with a CVSS v3 score to better understand the scope of high and critical severity open source vulnerabilities.

Source: WhiteSource

The numbers for 2019 show that over 55% of CVEs were rated high or critical. The study also reported that the number of open source vulnerabilities rose by 50% in 2019, meaning that not only are more CVEs classified as high and critical, but there are many more vulnerabilities to contend with overall.
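The two figures above are simple ratios, and computing them side by side shows why they compound. This sketch assumes CVSS v3.x base scores, where "High" starts at 7.0 and "Critical" at 9.0, so a single threshold captures both bands; the sample scores and counts in the usage are hypothetical and are not the report's data.

```python
def high_or_critical_share(scores: list[float]) -> float:
    """Percentage of CVSS v3.x scores rated High (7.0-8.9) or Critical (9.0-10.0)."""
    if not scores:
        raise ValueError("need at least one score")
    return 100.0 * sum(1 for s in scores if s >= 7.0) / len(scores)


def growth_pct(previous: int, current: int) -> float:
    """Year-over-year growth in the number of reported vulnerabilities, in percent."""
    if previous <= 0:
        raise ValueError("previous count must be positive")
    return 100.0 * (current - previous) / previous


if __name__ == "__main__":
    sample = [9.8, 7.5, 5.3, 3.1]        # hypothetical v3.x base scores
    print(high_or_critical_share(sample))  # share rated High or Critical
    print(growth_pct(100, 150))            # hypothetical counts for two years
```

If the share of high and critical findings holds steady while the total count grows by 50%, the absolute number of high and critical alerts grows by 50% as well; if the share also rises, the backlog grows faster than the total.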

The first and most prominent change in the recently released CVSS v3.1 was an explicit clarification that CVSS measures severity, not risk. This clarification was meant to give users more precise context and a shared understanding of what a severity score means, so that more of them could put the information to use. Yet the data shows that as the scoring method continues to evolve, developers and security teams are forced to address a large volume of high and critical severity vulnerabilities with limited tools for prioritization.

There are a number of reasons for the imbalance in the distribution of severity scores. They range from the security community's heightened focus on high and critical issues to the effort involved in creating CVEs: the process is time-consuming, which leads some to skip it for lower-severity issues. The question remains: How can development and security teams address this imbalance?

Should CVSS Ratings Figure into Vulnerability Remediation Prioritization?

As the security landscape continues to evolve, we know that to truly understand a vulnerability and how it might affect an organization or product, we need much more than a number.

CVSS v3.1 is based on the understanding that the CVSS score shouldn't be taken as the only parameter. Factors such as vulnerable dependencies and network architecture and configuration, to name a few, need to be considered when assessing the risk a security vulnerability poses to our assets.
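One simple way to let context outrank the raw number is to sort alerts on contextual signals first and use the CVSS score only as a tie-breaker. The sketch below is a hypothetical illustration, not WhiteSource's or FIRST's method: the `Finding` fields `reachable` and `internet_facing` are assumed signals that a team would have to supply from its own dependency analysis and network inventory, and the CVE identifiers in the usage are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One open source vulnerability alert; the context fields are hypothetical."""
    cve_id: str
    cvss: float            # CVSS v3.x base score
    reachable: bool        # assumed: is the vulnerable code actually invoked by our application?
    internet_facing: bool  # assumed: is the affected service exposed to untrusted networks?


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings by context first and CVSS score last.

    Reachable findings come before unreachable ones, internet-facing before
    internal, and the CVSS score only breaks ties within the same bucket.
    """
    return sorted(
        findings,
        key=lambda f: (f.reachable, f.internet_facing, f.cvss),
        reverse=True,
    )


if __name__ == "__main__":
    alerts = [
        Finding("CVE-A", 9.8, reachable=False, internet_facing=False),
        Finding("CVE-B", 7.5, reachable=True, internet_facing=True),
        Finding("CVE-C", 8.1, reachable=True, internet_facing=False),
    ]
    for f in prioritize(alerts):
        print(f.cve_id, f.cvss)
```

With these placeholder alerts, the 9.8 finding drops to last because its vulnerable code is never reached. A lexicographic ordering like this is easy to explain and audit; a weighted score is an alternative, but its weights would have to be chosen and justified by each team.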

It's still up to DevSecOps teams to ensure that their organization's software inventory, cloud environments, and network structure are all taken into consideration as part of the assessment of vulnerabilities.

Check out The Edge, Dark Reading's new section for features, threat data, and in-depth perspectives. Today's featured story: "Election Security in the Age of Social Distancing."