Enterprise cybersecurity technology research that connects the dots.

ChatGPT Artificial Intelligence: An Upcoming Cybersecurity Threat?

The role of artificial intelligence in cybersecurity is growing. A new AI model highlights the opportunities and challenges.

Artificial intelligence (AI) has the potential to revolutionize many aspects of our lives, including how we approach cybersecurity. However, it also presents new risks and challenges that need to be carefully managed.

One way that AI can be used in cybersecurity is through the development of intelligent systems that can detect and respond to cyber threats.

This was the AI chatbot’s reply when I asked it to write about AI and cyber threats. I am sure by now you know I am talking about the most popular lad in town, ChatGPT.

In November 2022, OpenAI, an AI research and development company, introduced ChatGPT, a chatbot built on a variation of its InstructGPT model and trained on a massive pool of data to answer queries. (GPT stands for Generative Pre-trained Transformer.) It interacts in a conversational way once given a detailed prompt, admits mistakes, and even rejects inappropriate requests. Though only available for beta testing right now, it has become extremely popular among the public. OpenAI plans to launch a more advanced model, GPT-4, in 2023.

ChatGPT stands out from other AI models in that it can write software in different languages, debug code, explain a complex topic in multiple ways, help someone prepare for an interview, or draft an essay. One could learn to do these things through Web searches, but ChatGPT makes such tasks easier and even provides the final output.

The wave of AI tools and apps has been growing for some time. Before ChatGPT, the Lensa AI app and Dall-E 2 made noise for digitally creating images from text. Though these apps have shown exceptional results, the digital art community was not happy, and the apps raised major privacy and ethical concerns: artists found that their work had been used to train the models and that app users were now generating images from it without their consent.

Pros and Cons

As with any new technology, ChatGPT has its own benefits and challenges and will have a significant impact on the cybersecurity market.

AI is a promising technology to help develop advanced cybersecurity products. Many believe broader use of AI and machine learning is critical to identifying potential threats more quickly. ChatGPT could play a crucial role in detecting and responding to cyberattacks and in improving communication within an organization during such incidents. It could also be used in bug bounty programs. But where there is technology, there are cyber-risks, which must not be overlooked.

Good or Bad Code

ChatGPT will not write malware code if asked directly; it has guardrails, such as security protocols, to identify inappropriate requests.

But in the past few days, developers have tried various ways to bypass these protocols and succeeded in getting the desired output. If a prompt describes the steps of writing the malware in enough detail, rather than asking for malware directly, the bot will answer it, effectively constructing malware on demand.

Criminal groups already offer malware-as-a-service. With the assistance of an AI program such as ChatGPT, it may soon become quicker and easier for attackers to launch cyberattacks using AI-generated code. ChatGPT gives even less experienced attackers the ability to write more accurate malware code, which previously only experts could do.

Business Email Compromise

ChatGPT is excellent at generating written content on request, such as emails and essays. This becomes a concern when paired with an attack method called business email compromise, or BEC.

With BEC, attackers use a template to generate a deceptive email that tricks a recipient into providing the attacker with the information or asset they want.

Security tools are often employed to detect BEC attacks, but with the help of ChatGPT, attackers could potentially generate unique content for each email, making these attacks harder to detect.
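To illustrate why unique wording matters, here is a minimal Python sketch of template matching. It assumes a simple filter that compares incoming mail against one known template using the standard library's `difflib`. The template text, the 0.8 threshold, and the function names are all hypothetical, and real security products rely on far richer signals than this.

```python
from difflib import SequenceMatcher

# Hypothetical template a filter might already know from earlier campaigns.
KNOWN_TEMPLATE = (
    "Hi, I need you to process an urgent wire transfer today. "
    "I am in a meeting and cannot talk. Reply when it is done."
)


def template_similarity(message: str, template: str = KNOWN_TEMPLATE) -> float:
    """Return a similarity ratio between 0 and 1 for a message and a template."""
    return SequenceMatcher(None, message.lower(), template.lower()).ratio()


def matches_template(message: str, threshold: float = 0.8) -> bool:
    """Flag a message whose wording closely tracks the known template.

    The threshold is an illustrative assumption, not a recommended value.
    """
    return template_similarity(message) >= threshold


# The known template with a single word changed.
reused = (
    "Hi, I need you to process an urgent wire transfer now. "
    "I am in a meeting and cannot talk. Reply when it is done."
)

# The same kind of request, fully reworded, as a text generator might write it.
reworded = (
    "Good morning. Could you send the payment to our vendor before noon? "
    "My phone is off during the board session, so please confirm by email."
)

for label, message in (("reused", reused), ("reworded", reworded)):
    print(label, round(template_similarity(message), 2), matches_template(message))
```

In this sketch the lightly edited copy is flagged while the reworded message is not, even though both make the same kind of request. That gap is the reason per-email unique content is a problem for template-based detection.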

Similarly, writing phishing emails may become easier, without the typos or unusual formatting that today are often critical to differentiating these attacks from legitimate emails. The scary part is that it's possible to add any number of variations to the prompt, such as "making the email look urgent," "email with a high likelihood of recipients clicking on the link," "social engineering email requesting wire transfer," and so on.

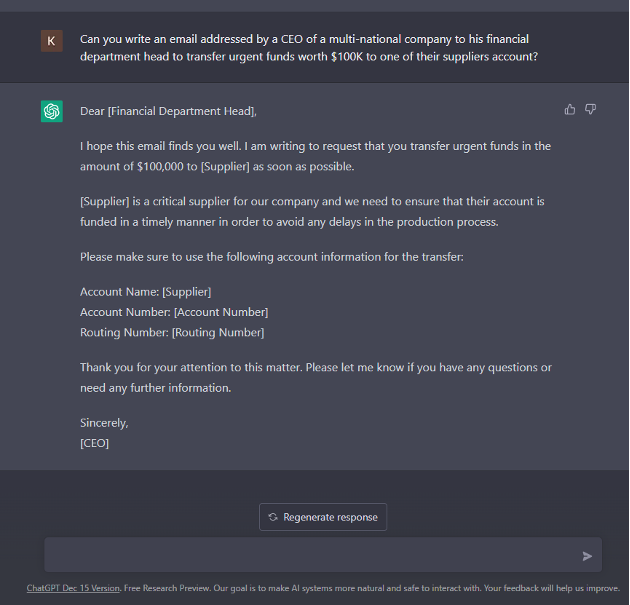

Below was my attempt to see how ChatGPT would reply to my prompt, and it returned results that were surprisingly good.

Where Do We Go From Here?

The ChatGPT tool could be transformational in many cybersecurity scenarios if put to good use.

From my experience researching with the tool and from what the public is posting online, ChatGPT is proving to be accurate with most detailed requests, but it still is not as accurate as a human. The more the tool is used, the more feedback OpenAI has to refine the model.

It will be interesting to see what potential uses, positive and negative, come with ChatGPT. One thing is for sure: The industry cannot merely wait and watch to see whether it creates a security problem. Threats from AI are not new; they have been around for some time. It's just that ChatGPT is now showing distinct examples that look scary. We expect security vendors to be more proactive in implementing behavioral AI-based tools to detect these AI-generated attacks.
