
Open Source LLM Projects Likely Insecure, Risky to Use

New LLM-based projects typically become successful in a short period of time, but the security posture of these generative AI projects is very weak, making them extremely unsafe to use.

There is a lot of interest in integrating generative AI and other artificial intelligence applications into existing software products and platforms. However, from a security standpoint, these AI projects are fairly new and immature, exposing organizations that use them to various security risks, according to a recent analysis by software supply chain security company Rezilion.

Since ChatGPT’s debut earlier this year, more than 30,000 open source projects on GitHub now use GPT-3.5, which highlights a serious software supply chain concern: How secure are these projects that are being integrated left and right?

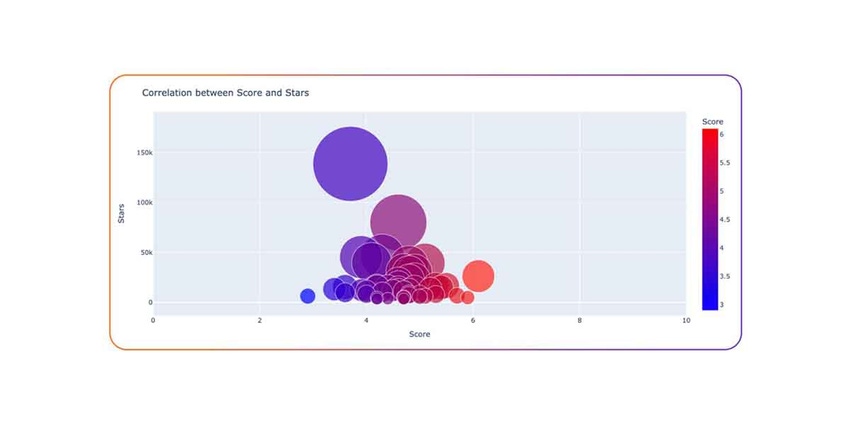

Rezilion’s team of researchers attempted to answer that question by analyzing the 50 most popular Large Language Model (LLM)-based projects on GitHub, where popularity was determined by how many stars the project has. Each project's security posture was measured by its OpenSSF Scorecard score. The Scorecard tool, from the Open Source Security Foundation, calculates the score by assessing the project repository on various factors, such as the number of vulnerabilities it has, how actively the code is maintained, its dependencies, and the presence of binary files. The higher the number, the more secure the code.
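To make the idea of a composite score concrete, the following is a minimal sketch of how per-check results could be rolled up into a single 0-to-10 number using a weighted average. The risk weights, the check results, and the `aggregate_score` helper are all illustrative assumptions, not the Scorecard tool's actual implementation or any project's real results.

```python
from typing import Mapping, Tuple

# Assumed risk weights for illustration only; the real tool's weighting may differ.
RISK_WEIGHTS = {"critical": 10.0, "high": 7.5, "medium": 5.0, "low": 2.5}


def aggregate_score(checks: Mapping[str, Tuple[float, str]]) -> float:
    """Combine per-check scores (0-10) into one weighted score, rounded to 0.1.

    `checks` maps a check name to a (score, risk level) pair.
    """
    weighted_total = 0.0
    weight_sum = 0.0
    for name, (score, risk) in checks.items():
        if not 0 <= score <= 10:
            raise ValueError(f"{name}: score {score} is outside 0-10")
        weight = RISK_WEIGHTS[risk]
        weighted_total += weight * score
        weight_sum += weight
    if weight_sum == 0:
        raise ValueError("no checks supplied")
    return round(weighted_total / weight_sum, 1)


# Made-up results for a hypothetical repository.
example_checks = {
    "Vulnerabilities": (10, "high"),
    "Maintained": (0, "high"),
    "Pinned-Dependencies": (4, "medium"),
    "Binary-Artifacts": (10, "high"),
}

print(aggregate_score(example_checks))
```

In this sketch, a single failing high-risk check (here, maintenance) pulls the overall score down sharply even when the other checks pass, which is why a young, lightly maintained project can score poorly despite having no known vulnerabilities.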

The researchers mapped each project’s popularity (size of the bubble, y-axis) against its security posture (x-axis). None of the projects analyzed scored higher than 6.1 (the average score was 4.6 out of 10), indicating a high level of security risk associated with these projects, Rezilion said. In fact, Auto-GPT, the most popular project (with almost 140,000 stars), is less than 3 months old and has the third-lowest score of 3.7, making it an extremely risky project from a security perspective.

When organizations are considering which open source projects to integrate into their codebases or which ones to work with, they consider factors such as whether the project is stable, currently supported, and actively maintained, and how many people are actively working on it. Organizations have to consider several types of risks, such as trust boundary risks, data management risks, and inherent model risks.

“When a project is new, there are more risks around the stability of the project, and it’s too soon to tell whether the project will keep evolving and remain maintained,” the researchers wrote in their analysis. “Most projects experience strong growth in their early years before hitting a peak in community activity as the project reaches full maturity, then the level of engagement tends to stabilize and remain consistent.”

The age of the project was relevant, Rezilion researchers said, noting that most of the projects in the analysis were between 2 and 6 months old. When the researchers looked at both age and Scorecard score, the most common combination was a 2-month-old project with a Scorecard score of 4.5 to 5.
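The age-and-score grouping described above can be sketched as a simple binning exercise. This is a hedged illustration only: the sample data is made up, and the `score_bucket` and `most_common_combination` helpers and the 0.5-point bucket width are assumptions rather than the researchers' actual method.

```python
import math
from collections import Counter
from typing import Iterable, Tuple


def score_bucket(score: float, width: float = 0.5) -> Tuple[float, float]:
    """Return the (low, high) bucket of the given width that contains score."""
    low = math.floor(score / width) * width
    return (low, low + width)


def most_common_combination(projects: Iterable[Tuple[int, float]]):
    """Find the most frequent (age in months, score bucket) pair and its count."""
    counts = Counter((age, score_bucket(score)) for age, score in projects)
    return counts.most_common(1)[0]


# Made-up (age in months, Scorecard score) pairs, for illustration only.
sample = [(2, 4.6), (2, 4.9), (3, 3.9), (2, 4.5), (6, 5.8), (4, 5.2)]

combination, count = most_common_combination(sample)
print(combination, count)
```

Grouping by bucket rather than by exact score keeps near-identical scores together, so the result reflects a cluster of similar projects rather than a coincidence of equal values.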

“Newly-established LLM projects achieve rapid success and witness exponential growth in terms of popularity,” the researchers said. “However, their Scorecard scores remain relatively low.”

Development and security teams need to understand the risks associated with adopting any new technologies and make a practice of evaluating them prior to use.
