Why It’s Insane To Trust Static Analysis

If you care about achieving application security at scale, then your highest priority should be to move to tools that empower everyone, not just security experts.

In a previous blog, Jason Schmitt, the vice president and general manager of HP Fortify, promotes the static (Oops… status) quo by spreading some fear, uncertainty, and doubt about the newest type of application security tool known as Interactive Application Security Testing (IAST). Vendors selling static analysis tools for security have been overclaiming and under-delivering for over a decade. It’s time to stop misleading the public.

Jason seems to have reacted strongly to my observation that it’s a problem if you need security experts every time you want to run a security tool. What he doesn’t seem to understand is that this creates an expensive, wasteful, scale-killing bottleneck. Everyone who attempts to use static and dynamic analysis tools has a team of experts onboarding apps, tailoring scans, and triaging false positives.

[Read Jason’s opposing view in its entirety in The Common Core of Application Security.]

Of course we need experts, but they’re a scarce resource. We need them making thoughtful and strategic decisions, conducting threat modeling and security architecture efforts, and turning security policies into rules that can be enforced across the lifecycle by automation. Experts should be “coaches and toolsmiths” -- not babysitting tools and blocking development. Static tools, and other tools that aren’t continuous and require experts, simply don’t fit my view of what an application security program should look like.

In search of a unified security product

Automating application security is absolutely critical, so let’s talk about some of the things vendors won’t tell you about static analysis. As a point of comparison, I’ll use Contrast, an interactive application security testing (IAST) product from Contrast Security, where I am CTO.

Contrast is a single agent that provides SAST, DAST, IAST, and runtime application self-protection (RASP) capabilities. Contrast works from inside the running application so it has full access to all the context necessary to be both fast and accurate. It applies analysis techniques selectively. For example, runtime analysis is amazing at injection flaws because it can track real data through the running application. Static analysis is good for flaws that tend to manifest in a single line of code, like hardcoded passwords and weak random numbers. And dynamic analysis is fantastic at finding problems revealed in HTTP requests and responses, like HTTP parameter pollution, cache control problems, and authentication weaknesses.

IAST uses all these techniques on the entire application, including libraries and frameworks, not just the custom code. So rather than deploying a mishmash of standalone SAST, DAST, WAF, and IDS/IPS tools, the combination of IAST and RASP in a single agent provides unified security from the earliest stage of development all the way through production.

Everyone knows SAST is inaccurate

If you care about the accuracy of security tools, you should check out the new OWASP Benchmark Project. The project is sponsored by DHS and has created a huge test suite to gauge the true effectiveness of all kinds of application security testing tools — over 21,000 test cases.

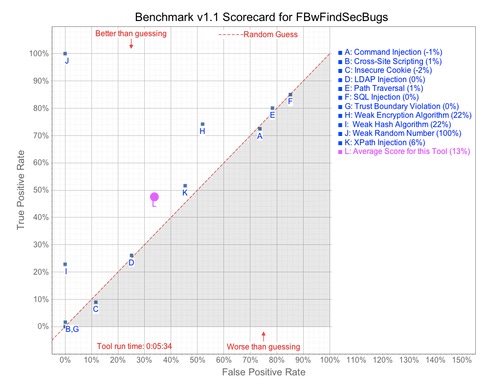

The Benchmark calculates an overall score for a tool based on both the true positive rate and the false positive rate. This project is doing some real science on the speed and accuracy of these tools. Here are the results for a popular open source static analysis tool called FindBugs (with the Security Plugin).

The Benchmark tests a huge number of variants of each vulnerability in order to measure the strengths and weaknesses of each tool precisely. False positives are incredibly important, as each one takes time and expertise to track down. That’s why it is critically important to understand both true and false positive metrics when choosing a security tool.
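To see why both rates matter, here is a minimal Python sketch of a score that combines them. It assumes the score is simply the true positive rate minus the false positive rate, scaled to 0..100; the `Tally` class and every number in it are made up for illustration and are not Benchmark results.

```python
from dataclasses import dataclass


@dataclass
class Tally:
    """Counts for one tool on one set of test cases (hypothetical numbers)."""
    true_positives: int   # real vulnerabilities the tool reported
    false_negatives: int  # real vulnerabilities the tool missed
    false_positives: int  # safe test cases the tool flagged anyway
    true_negatives: int   # safe test cases the tool correctly left alone


def true_positive_rate(t: Tally) -> float:
    return t.true_positives / (t.true_positives + t.false_negatives)


def false_positive_rate(t: Tally) -> float:
    return t.false_positives / (t.false_positives + t.true_negatives)


def score(t: Tally) -> float:
    # Assumption: score = TPR - FPR, expressed on a 0..100 scale.
    return 100 * (true_positive_rate(t) - false_positive_rate(t))


# Two invented tools: one flags almost everything, one is more selective.
noisy = Tally(true_positives=90, false_negatives=10,
              false_positives=80, true_negatives=20)
selective = Tally(true_positives=60, false_negatives=40,
                  false_positives=10, true_negatives=90)

print(f"noisy tool:     {score(noisy):.0f}")
print(f"selective tool: {score(selective):.0f}")
```

Under this assumption, the noisy tool finds more real problems yet scores far lower, because a tool that flags nearly everything earns no credit for the alarms it raises on safe code.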

The good news is that anyone can use the Benchmark to find out exactly what the strengths and weaknesses of their tools are. You don’t have to trust vendor claims. All you do is clone the OWASP Benchmark git repository, run your tool on it, and feed the report into the Benchmark’s scoring tool.

OWASP reports that the best static analysis tools score in the low 30s (out of 100) against this benchmark. Dynamic analysis tools fared even worse. What jumps out is that static tools do very poorly on any type of vulnerability that involves data flow, particularly injection flaws. They do best on problems like weak random number generation that tend to be isolated to a single line of code.
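The difference between the two kinds of flaw is easier to see in code. The following Python sketch is not taken from the Benchmark; the function names, the request dictionary, and the in-memory SQLite database are all invented for illustration. The weak random number is visible on a single line, while the injection flaw only exists because untrusted data travels through three separate functions before it reaches the database.

```python
import random
import sqlite3


def weak_session_token() -> str:
    # Single-line flaw: `random` is not a cryptographic generator, and the
    # problem is fully visible right here without any other context.
    return "%032x" % random.getrandbits(128)


def read_param(request: dict) -> str:
    # Source: untrusted input enters the application.
    return request["name"]


def build_query(name: str) -> str:
    # Propagation: the untrusted value is concatenated into SQL text.
    return "SELECT secret FROM users WHERE name = '" + name + "'"


def run_query(db: sqlite3.Connection, sql: str) -> list:
    # Sink: the query text is executed.
    return db.execute(sql).fetchall()


def lookup_unsafe(db: sqlite3.Connection, request: dict) -> list:
    # The flaw only appears when source, propagation, and sink are connected.
    return run_query(db, build_query(read_param(request)))


def lookup_safe(db: sqlite3.Connection, request: dict) -> list:
    # A parameterized query keeps the input out of the SQL text.
    sql = "SELECT secret FROM users WHERE name = ?"
    return db.execute(sql, (read_param(request),)).fetchall()


def demo_db() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    db.executemany("INSERT INTO users VALUES (?, ?)",
                   [("alice", "a-secret"), ("bob", "b-secret")])
    return db


attack = {"name": "x' OR '1'='1"}
db = demo_db()
print(len(lookup_unsafe(db, attack)), "rows leaked by the unsafe lookup")
print(len(lookup_safe(db, attack)), "rows returned by the safe lookup")
```

A tool that watches the running application sees the attack string arrive at `run_query` intact. A tool that only reads the source has to connect the three functions correctly, which gets harder as frameworks and libraries sit between them.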

SAST disrupts software development

There are two major problems with Jason’s criticisms of IAST performance. First, modern IAST is blazingly fast. But more importantly, you use IAST to find vulnerabilities during development and test, not production. So let’s talk about the metrics that matter during development and test.

Continuous, real-time feedback is a natural process fit for high-speed modern software development methodologies like Agile and DevOps. And since it’s a distributed approach, it works in parallel across all your applications.

On the other hand, static tools take hours or days to analyze a single application. And because you need experts, tons of RAM, and tons of CPU to analyze that single application, it’s very difficult to parallelize. I’ve seen this so many times before -- static ends up being massively disruptive to software development processes, resulting in the tool being shelved or used very rarely. When you get thousands of false alarms and each one takes between 10 minutes and several hours to track down, it’s impossible to dismiss the cost of inaccuracy.
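A back-of-the-envelope calculation shows how quickly that cost adds up. In this Python sketch the figure of 1,000 alarms and the two-hour upper bound are assumptions standing in for “thousands” and “several hours”; only the 10-minute lower bound comes from the text above.

```python
def triage_hours(false_alarms: int, minutes_each: float) -> float:
    """Total expert time, in hours, spent tracking down false alarms."""
    return false_alarms * minutes_each / 60


ALARMS = 1000  # assumed count, standing in for "thousands"

best_case = triage_hours(ALARMS, 10)     # 10 minutes per alarm
worse_case = triage_hours(ALARMS, 120)   # assumed 2 hours per alarm

print(f"{best_case:.0f} to {worse_case:.0f} expert hours")
```

With these assumed inputs the range is roughly 167 to 2,000 expert hours, or about 4 to 50 forty-hour weeks, for a single scan of a single application.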

SAST coverage is an illusion

Static tools only see code they can follow, which is why modern frameworks are so difficult for them. Libraries and third-party components are too big to analyze statically, which results in numerous “lost sources” and “lost sinks” – toolspeak for “we have no idea what happened inside this library.” Static tools also silently quit analyzing when things get too complicated.

Try running a static analysis tool on an API, web service, or REST endpoint. The tool will run but it won’t find anything because it can’t understand the framework. And you’ll have no idea what code was and wasn’t analyzed. This false sense of security might be more dangerous than running no tool at all.

Unlike static tools that provide zero visibility into code coverage, with IAST you control exactly what code gets tested. You can simply use your normal development, test, and integration processes and your normal coverage tools. If you don’t have good test coverage, use a simple crawler or record a Selenium script to play on your CI server.
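A “simple crawler” really can be simple. The sketch below uses only the Python standard library and is one possible approach, not a Contrast feature; the `crawl` function, its parameters, and the `app.test` host used in the example are hypothetical. It follows same-host links from a start page so that each page is requested at least once while the instrumented application is running.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch(url: str) -> str:
    # Default fetcher: a plain HTTP GET against the running application.
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url: str, fetch_page=fetch, max_pages: int = 50) -> set:
    """Visit same-host pages breadth-first and return the URLs requested."""
    host = urlparse(start_url).netloc
    seen, queue = set(), [start_url]
    while queue and len(seen) < max_pages:
        url = queue.pop(0)
        if url in seen:
            continue
        seen.add(url)
        try:
            html = fetch_page(url)
        except OSError:
            continue  # unreachable page: skip it and keep crawling
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            target = urljoin(url, href).split("#")[0]  # drop fragments
            if urlparse(target).netloc == host and target not in seen:
                queue.append(target)
    return seen


# Example with a fake two-link site, so the sketch runs without a server.
fake_site = {
    "http://app.test/": '<a href="/a">a</a><a href="http://other.test/x">x</a>',
    "http://app.test/a": '<a href="/">home</a><a href="b#top">b</a>',
    "http://app.test/b": "",
}
visited = crawl("http://app.test/", fetch_page=lambda u: fake_site.get(u, ""))
print(sorted(visited))
```

On a CI server you would drop the `fetch_page` argument and point `crawl` at the URL of the application under test. A crawler like this only exercises pages reachable by plain links, so forms and authenticated flows still need a recorded script or real tests.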

AppSec automation will empower everyone

If you care about achieving application security at scale, then your highest priority should be to move to tools that empower everyone, not just security experts. Check the OWASP Benchmark Project and find out the strengths and weaknesses of the tools you’re considering.

Whether legacy tool vendors like it or not, instrumentation will change application security forever. Consider what New Relic did to the performance market. Their agent uses instrumentation to measure performance with high accuracy from within a running application. And it changed their industry from one dominated by tools for experts and PDF reports to one where everyone is empowered to do their own performance engineering. We can do the same for application security.

Related content:

What Do You Mean My Security Tools Don’t Work on APIs?!! by Jeff Williams

Software Security Is Hard But Not Impossible by Jason Schmitt
