'MagicDot' Windows Weakness Allows Unprivileged Rootkit Activity

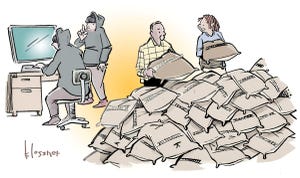

Malformed DOS paths in Windows file naming could be used to conceal malicious content, files, and processes.
