New technical chatbot capabilities raise the promise that their help in threat modeling could free humans for more interesting work.

There's been a flood of news about OpenAI's new chatbot, ChatGPT. For all the very real critiques, it does an astounding and interesting job of producing reasonable responses. What does it mean for threat modeling? There's real promise that it will transform threat modeling as we know it.

For readability, I'll just call it "chatbot." The specific examples I use are from OpenAI's implementation, but we can think about this as a new type of technical capability that others will start to offer, and so I'll go beyond what we see today.

Let's start with what it can do, ask what can go wrong, see if we can manage those issues, and then evaluate.

What Chatbots Can Do

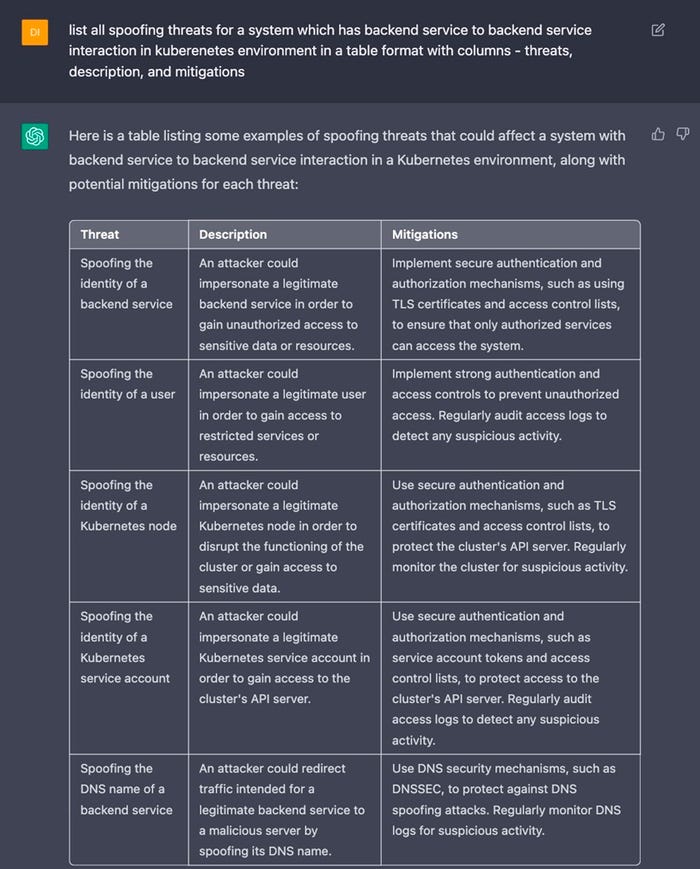

On the Open Web Application Security Project® (OWASP) Slack, @DS shared a screenshot where he asked it to "list all spoofing threats for a system which has back-end service to back-end service interaction in Kubernetes environment in a table format with columns — threats, description, and mitigations."

The output is fascinating. It starts "Here is a table listing some examples …" Note the switch from "all" to "some examples." But more to the point, the table isn't bad. As @DS says, it provided him with a base, saving hours of manual analysis work. Others have used it to explain what code is doing or to find vulnerabilities in that code.

Chatbots (more specifically, the large language models behind them, such as GPT-3) don't really know anything. Under the hood, they pick statistically likely next words in response to a prompt. That means they'll parrot the threats that someone has written about in their training data. On top of that, they substitute symbols in ways that, to our anthropomorphizing brains, look like reasoning by analogy.

When I created the Microsoft SDL Threat Modeling Tool, we saw people open the tool and not know what to do, so we added a simple diagram that they could edit. We described that as addressing "blank page syndrome," a problem many people run into as they're learning threat modeling. A chatbot's list of threats can play the same role: a starting point to react to rather than an empty page.

What Can Go Wrong?

While chatbots can produce lists of threats, they're not really analyzing the system that you're working on. They're likely to miss unique threats, and they're likely to miss nuance that a skilled and focused person might see.

Chatbots will get "mostly good enough," and that is enough to lull people into relaxing and not paying close attention. That seems really bad.

To evaluate this, let's step way back and think about why we threat model.

What Is Threat Modeling? What Is Security Engineering?

We threat model to help us anticipate and address problems, to deliver more secure systems. Engineers threat model to illuminate security issues as they make design trade-offs. And in that context, having an infinite supply of inexpensive possibilities seems far more exciting than I expected when I started this essay.

I've described threat modeling as a form of reasoning by analogy, and pointed out that many flaws exist simply because no one knew to look for them. Once we look in the right place, with the right knowledge, the flaws can be pretty obvious. (That's so important that making it easier is the key goal of my new book.)

Many of us aspire to do great threat modeling, the kind where we discover an exciting issue, something that'll get us a nice paper or blog post, and if you just nodded along there … it's a trap.

Much of software development is boring management of a seemingly unending set of details, such as iterating over lists to put things into new lists, then sending them to the next stage in a pipeline. Threat modeling, like test development, can be useful because it gives us confidence in our engineering work.

When Do We Step Back?

Software is hard because it's so easy. The apparent malleability of code makes it easy to create, and it's hard to know how often or how deeply to step back. A great deal of our energy in managing large software projects (including both bespoke and general-use software) goes to assessing what we're doing and getting alignment on priorities. That kind of stepping back happens slowly and rarely, because it's expensive.

It's not what the chatbots do today, but I could see similar software being tuned to report how much any given input changes its models. Such software could look across commits, discussions in email and Slack, and tickets, and help us assess how similar a piece of work is to other work. That could profoundly change the energy needed to keep projects (big or small) on track. And that, too, contributes to threat modeling.
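As a rough illustration of "assessing similarity to other work," here is a minimal sketch that scores how novel a new change description is compared with past ones, and flags very novel changes as candidates for stepping back. It assumes a plain word-overlap (Jaccard) similarity as a stand-in; it is not what any chatbot does today, nor a method the text prescribes. The function names, the `threshold` of 0.7, and the sample history are all hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two sets have in common (1.0 = identical, 0.0 = disjoint)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def novelty(new_item: str, history: list[str]) -> float:
    """1.0 means nothing similar has been seen before; 0.0 means an exact word match exists."""
    if not history:
        return 1.0
    new_tokens = tokens(new_item)
    best = max(jaccard(new_tokens, tokens(old)) for old in history)
    return 1.0 - best


def needs_step_back(new_item: str, history: list[str], threshold: float = 0.7) -> bool:
    """Hypothetical policy: very novel work is a cue to pause and re-threat-model."""
    return novelty(new_item, history) >= threshold


# Hypothetical history of commit messages.
history = [
    "Add retry logic to payment service client",
    "Fix typo in payment service README",
    "Bump logging library version",
]

routine = "Add retry logic to inventory service client"
unusual = "Expose admin API on public load balancer"
print(needs_step_back(routine, history))  # prints False
print(needs_step_back(unusual, history))  # prints True
```

Word overlap is crude (it ignores meaning and would miss a risky change phrased in familiar words), which is part of why the text imagines language models doing this job instead.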

All of this frees up human cycles for more interesting work.
