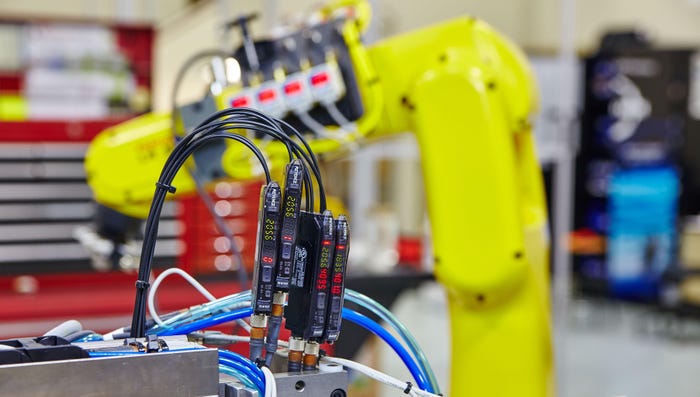

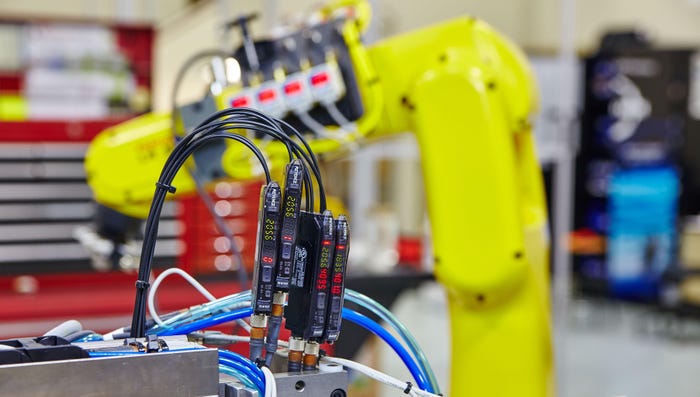

Yellow industrial robotic arm and electrical components in automated facility

2023: A 'Good' Year for OT Cyberattacks

Attacks increased by "only" 19% last year, but that number is expected to grow significantly.