You have to consider the human factor when you’re designing security interventions, because the best intentions can have completely opposite consequences.

In security we have a saying: “Why do cars have brakes? So they can stop? No, so they can go fast!” Practiced badly, security can bring successful software projects to a screeching halt. Creating “security gates” for software projects, compliance reviews, and reporting phantom “false alarm” risks can kill a healthy relationship between security and development teams. But security doesn’t have to be about hindering business. Done right, application security programs are designed to get people working together in a way that is compatible with software development. The goal is to find solutions that allow business to go fast and be secure.

You know how a squirrel tries to get out of the way of a car? Jump left. Zip right. Run directly under the tire. That’s how squirrels attempt to deal with a risk introduced by new technology (the car) using the predator avoidance mechanism designed by nature. It doesn’t work well because squirrels are marginally worse than humans at judging technology risk. Noted security expert Bruce Schneier argues that humans are fundamentally “bad at accurately assessing modern risk. We’re designed to exaggerate spectacular, strange, and rare events, and downplay ordinary, familiar, and common ones.”

But, if you really think about it, Mother Nature is just slow. We’re bad at IT risk because our defenses mostly evolved 100,000 years ago in small family groups in Africa. Given enough time, evolution adapts to new risks just fine. Species create remarkable defenses that are compatible with each organism. The question is: Can we speed up this process so that we can adapt more quickly to a rapidly changing technology risk environment?

Security and business evolve together

Usually, the software world just adopts new technology without thinking much about security. I’ve done hundreds of security reviews of products that were purchased without any security analysis -- and it’s usually not pretty. It’s not just products, either. Organizations adopt new application frameworks, libraries, languages, and more without doing any security analysis. Even huge new features, like HTML5, show up in our browsers before any serious security work is done. This is a painful path forward, but eventually we hack and patch our way to a “just barely good enough” level of security.

In my youth, I thought that enough design, architecture, and formal modeling could secure anything. But I’ve come to understand that the only way to security is through an evolutionary process with “builders” and “breakers” constantly challenging the status quo. Compliance efforts don’t engender this evolution, which is one reason they are often reviled. What’s not yet clear is exactly what does lead one organization to a great security culture, while another with the same practices struggles and makes little headway.

Safety and security make us take more risks

We put a lot of work into safety and security. But how do we know any “best-practices” are actually making us more secure? Before you answer, you should consider that a number of studies have shown that drivers of vehicles with ABS tend to drive faster, follow more closely, and brake later, accounting for the failure of ABS to result in any measurable improvement in road safety. So we are getting places faster without improving our safety.

This counter-intuitive outcome is called “Risk Compensation” or “Risk Homeostasis.” It turns out that people seem to be inherently wired with a certain level of risk tolerance. Study after study supports this idea. Bike helmets have been shown to make cyclists ride faster, even increasing the fatality rate in some studies. Seat belts make you drive less carefully. Ski helmets result in more aggressive skiing. Safer skydiving equipment, children’s toys, even condoms -- all show an almost intentional desire to bring the accident and fatality rates back to their original levels.

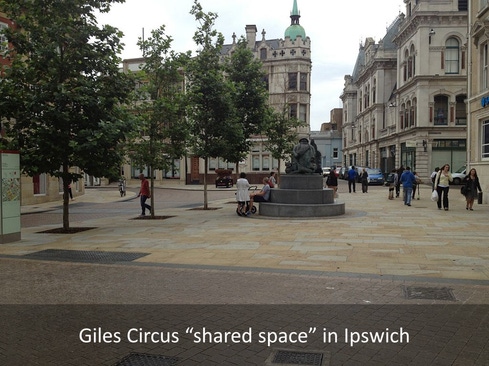

People’s perception is obviously critically important to changing behavior. What do you notice about the pedestrians in the picture below?

Did you notice that those people are walking around without any traffic signs, warnings, road markings, curbs, or crosswalks? Security madness! If this were an audit, their area would be shut down and sent for remediation. However, this “shared space” was consciously designed to increase the level of uncertainty for drivers and other road users. Amazingly, this approach has been found to result in lower vehicle speeds and fewer road casualties.

The perception of protection

Wait… What!? Protection technology makes people take more risks? Removing safety markings makes roads safer? Yep. You have to consider the human factor when you’re designing security interventions. Your best intentions could have completely opposite and unintended consequences. For example, maybe your new web application firewall gives developers a false sense of security and they stop doing input validation. Or maybe you rely on automated security testing that has so many false alarms that people start to ignore the results.

These problems start when the perception of security isn’t in balance with the reality of security protections. When perception is greater than the protection, people have a false sense of security and take unnecessary risks. On the other hand, when the protection exceeds the perception, the business will shy away from profitable activities.

Creating a culture of accelerated security evolution with transparency

Ultimately, we all want to achieve that elusive culture that makes security a part of information technology without everybody ending up mad. I think we can all agree that building stuff and trying to make it secure later isn’t the right approach. But neither is blindly following a process model that just aggregates a bunch of guesses about what might work.

The path to security that works is rapid evolution – the “builders” and “breakers” working to push security forward. The key to speeding up this process is to make security transparent, so that the perception of security matches up with the reality. The keys to transparency are:

Starting with a model. You have to start with an “expected model” of what you think your defenses should be. It doesn’t have to be perfect, as your model will evolve over time.

Getting coverage. Verify your expected model across all your applications to an appropriate level of rigor. Establish a security sensor network that can monitor your entire portfolio.

Getting continuous. Security visibility has a terrifically short half-life. Today’s highly accelerated software processes demand real-time feedback to developers and other stakeholders.
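To make the three keys a little more concrete, here is a minimal sketch of what comparing an “expected model” against verified reality might look like. Everything in it is an assumption for illustration: the defense names, the `AppReport` structure, and the two sample applications are hypothetical, and a real program would feed this from whatever testing tools or sensors it actually uses.

```python
from dataclasses import dataclass

# Hypothetical "expected model": the defenses we believe every application
# should have. It does not need to be perfect; it is meant to evolve.
EXPECTED_DEFENSES = {"input_validation", "output_encoding", "authentication", "tls"}


@dataclass(frozen=True)
class AppReport:
    """Defenses actually verified for one application (illustrative structure)."""
    name: str
    verified: frozenset


def missing_defenses(report, expected=EXPECTED_DEFENSES):
    """Return the expected defenses that have not been verified for this app."""
    return sorted(set(expected) - set(report.verified))


def portfolio_coverage(reports, expected=EXPECTED_DEFENSES):
    """Fraction of (application, defense) pairs that have been verified."""
    total = len(reports) * len(expected)
    if total == 0:
        return 0.0
    verified = sum(len(set(expected) & set(r.verified)) for r in reports)
    return verified / total


# Sample data, invented for the example.
reports = [
    AppReport("billing", frozenset({"input_validation", "authentication", "tls"})),
    AppReport("portal", frozenset({"tls"})),
]

for r in reports:
    print(r.name, "missing:", missing_defenses(r))
print("coverage:", portfolio_coverage(reports))
```

Rerunning a check like this on every build, rather than once a year, is one way to keep the perception of security close to the reality. The gaps it reports are where perception and protection have drifted apart.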

If you have processes that aren’t demonstrably effective within this framework, cut them. You’ll get a more effective security program and probably save a lot of time and money. What are your techniques for creating a strong security culture? Let me know how you know it works in the comments! Good luck.
