How can two organizations with the exact same app security program have such wildly different outcomes over time? The answer is corporate culture.

The knee-jerk approach to application security is to start finding and fixing vulnerabilities. The problem with these reactive programs is that they end up being expensive witch hunts that don’t change the way code is built. Instead, we need to treat those vulnerabilities as symptoms of a deeper problem that lies somewhere in the software development organization.

Over the past 15 years, I’ve worked with a variety of organizations, both large and small, to improve their application security capabilities. One thing I’ve noticed is that two organizations with the exact same application security activities can have wildly different results over time. One organization will improve, steadily stamping out entire classes of vulnerabilities. The other will continue to find the same problems year after year.

The difference is culture. In some organizations, security is an important concern that is considered a part of every decision. In others, security is considered a productivity killer and a waste of time. These "culture killers" will undermine and ultimately destroy your application security program. Let’s take a look at the seven deadliest security sins.

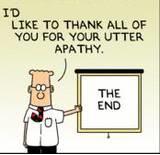

Sin 1: Apathy. In 2002, Bill Gates famously drafted his "Trustworthy Computing" memo, in which he made clear that "Trustworthy Computing is the highest priority for all the work we are doing. We must lead the industry to a whole new level of Trustworthiness in computing." Executives have the power to make security a priority or a joke. What messages are you sending with your security decisions and actions?

Sin 2: Secrecy. Consider the mission of the Open Web Application Security Project (OWASP): "To make software security visible, so that individuals and organizations worldwide can make informed decisions about true software security risks." Making security visible in your organization keeps development teams and business organizations on the same page. Secrecy, on the other hand, breeds confusion and blind decision-making, and can even create disincentives for secure coding. Do you treat every vulnerability as an opportunity to improve, or as a secret to cover up as quickly as possible?

Sin 3: Forgetfulness. Security is created through an evolutionary process. "Builders" create a security system, and "breakers" challenge that security. Each iteration advances security a little bit. However, many organizations don’t save the improvements made during each iteration. They don’t learn. Instead, their developers continue to make the same mistakes year after year. Organizations that learn from their mistakes capture those lessons in standards, technical defenses, training, and other forms.

Sin 4: Promiscuity. Some organizations allow development teams to adopt new technologies without any security analysis. This might be an open-source library, a new framework, or a new product. Eventually, vulnerabilities are identified in the new technology, but by then it is either technically or contractually too late to fix. The solution is to ask the hard questions before you get in bed with a new technology. What is the security story behind the technology? How can you verify security? How have the vendors handled security issues in the past?

Sin 5: Creativity. Done right, security is boring. Every time we see custom security controls, we find serious problems. Everyone knows not to build their own cryptography, or at least they should. But the same reasoning applies to authentication, access control, input validation, encoding, logging, intrusion detection, and other security defenses.
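To make the encoding point concrete, here is a small illustrative sketch (not a prescribed implementation) contrasting a hand-rolled HTML encoder with Python's standard-library `html.escape`. The function names `naive_encode` and `safe_encode` and the payload are hypothetical, made up for this example. The custom encoder looks reasonable, but it ignores quote characters, so input placed inside an HTML attribute can break out of it.

```python
import html

def naive_encode(value: str) -> str:
    # Hypothetical "creative" encoder: handles angle brackets only,
    # and forgets about &, " and '.
    return value.replace("<", "&lt;").replace(">", "&gt;")

def safe_encode(value: str) -> str:
    # Standard-library encoder: escapes &, <, > and, with quote=True,
    # both " and ' as well.
    return html.escape(value, quote=True)

# Attacker-controlled input destined for an HTML attribute value.
payload = '" onmouseover="alert(1)'

attribute_naive = '<input value="' + naive_encode(payload) + '">'
attribute_safe = '<input value="' + safe_encode(payload) + '">'

print(attribute_naive)  # the quote survives, so the payload escapes the attribute
print(attribute_safe)   # the quotes are encoded, so the payload stays inert
```

Even a standard encoder is only correct for the context it was designed for: `html.escape` covers HTML text and quoted attributes, not JavaScript, CSS, or URL contexts, which need their own vetted encoders.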

Sin 6: Blame. Some people like to blame developers for security flaws. Economic theory suggests that this is the efficient approach, since developers are in the best position to prevent security glitches from occurring. But blaming developers for application security problems is exactly the wrong thing to do. Blame creates a dangerous feedback loop where developers despise security, security teams exaggerate their findings to get attention, and everyone ends up blindly trusting applications. Remember the words of Ice-T, "Don’t hate the playa, hate the game."

Sin 7: Faith. Many organizations rely on a quick scan or static analysis to see if their applications are "good-to-go" without really understanding what they are getting. For example, access control is a critical security defense, but most tools can’t test it at all. The same is true of many encryption and authentication defenses. So you’ll need a different strategy to verify that those controls are in place and effective. Blind faith is not a defense.
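Access control shows why. Whether a given user should see a given record is a business rule, and a generic scanner has no way to know it. One alternative strategy, sketched here under assumed names (`User`, `Document`, `can_read`, and the owner-or-admin policy are all hypothetical), is to write the intended policy down as explicit tests, including the negative cases.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class User:
    name: str
    is_admin: bool = False

@dataclass(frozen=True)
class Document:
    doc_id: int
    owner: str

def can_read(user: User, doc: Document) -> bool:
    # Hypothetical policy: owners and admins may read a document; nobody else may.
    return user.is_admin or user.name == doc.owner

def check_access_policy() -> None:
    alice = User("alice")
    bob = User("bob")
    admin = User("root", is_admin=True)
    report = Document(doc_id=1, owner="alice")

    # Positive cases: the people who should get in, do.
    assert can_read(alice, report)
    assert can_read(admin, report)
    # Negative case: the check a scanner cannot infer, because it has no way
    # to know that bob is not supposed to read alice's report.
    assert not can_read(bob, report)

check_access_policy()
print("access policy checks passed")
```

These checks only verify the policy function itself; you would still need to confirm that every route or API handler actually calls it, which is exactly the kind of verification a quick scan tends to miss.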

Consider your software development culture and ask yourself whether you’ve made secure coding simple and fun. Just remember: All the tools and processes in the world won’t lead to secure code unless you tackle the culture killers. Good luck!
